Welcome to the KDD 2020 Tutorial on Online User Engagement: Metrics and Optimization.

- Date: August 23rd, 8:00 AM - 12:00 PM Pacific Time

- Location: Virtual Conference

See all other KDD 2020 Tutorials.

You can also check out previous editions of similar tutorials:

- WWW 2019 Tutorial on Online User Engagement: Metrics and Optimization

- WSDM 2018 Tutorial on Metrics of User Engagement

- WWW 2013 Tutorial on Measuring User Engagement

Motivation

In the online world, user engagement refers to the quality of the user experience that emphasizes the positive aspects of the interaction with an online service and, in particular, the phenomena associated with wanting to use that service longer and more frequently. User engagement is a key concept in the design of online services, as successful applications are not just used, but are engaged with. Users invest time, attention, and emotion in the services they use. Measuring user engagement and being able to drive it are therefore key to evaluating the success of any online service, from news to e-commerce sites, as they inform the understanding of user needs and expectations, system design, and functionality.

User engagement is a multifaceted, complex phenomenon, and as such has given rise to a number of approaches for its measurement. Common ways of measuring user engagement include self-reporting, e.g., questionnaires; observational methods, e.g., desktop actions; and online analytics, which employ online behavior metrics that assess users’ depth of engagement. These approaches represent various trade-offs between the scale of data analyzed and the depth of understanding. In this tutorial, we focus on the last of these, large-scale online analytics. Within this approach, engagement is most commonly measured through various proxy metrics. Standard metrics include number of page views, number of unique users, dwell time, bounce rate, click-through rate, and return rate. We will review these metrics and discuss what they measure, along with their advantages and drawbacks. We will also detail how appropriate each metric is for various types of online services.
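As a minimal sketch of how the standard metrics listed above might be computed, the following Python snippet works on a small, made-up page-view log. The record layout, the function name `engagement_metrics`, and the exact definitions used (for example, a bounce as a session with a single page view, and return rate as the share of users with more than one session) are illustrative assumptions; actual definitions vary from one online service to another.

```python
from collections import defaultdict

# Hypothetical page-view log, one record per page view:
# (user_id, session_id, dwell_seconds, impressions, clicks)
events = [
    ("u1", "s1", 30.0, 10, 1),
    ("u1", "s1", 90.0, 5, 0),
    ("u2", "s2", 12.0, 8, 0),
    ("u1", "s3", 45.0, 10, 2),
    ("u3", "s4", 5.0, 7, 0),
]


def engagement_metrics(events):
    """Compute simple proxy metrics of engagement from a non-empty log."""
    if not events:
        raise ValueError("events must not be empty")

    views_per_session = defaultdict(int)  # session_id -> number of page views
    sessions_per_user = defaultdict(set)  # user_id -> set of session ids
    total_dwell = 0.0
    total_impressions = 0
    total_clicks = 0

    for user, session, dwell, impressions, clicks in events:
        views_per_session[session] += 1
        sessions_per_user[user].add(session)
        total_dwell += dwell
        total_impressions += impressions
        total_clicks += clicks

    # Assumption: a bounce is a session containing exactly one page view.
    bounces = sum(1 for n in views_per_session.values() if n == 1)
    # Assumption: a returning user has more than one session in the log.
    returning = sum(1 for s in sessions_per_user.values() if len(s) > 1)

    return {
        "page_views": len(events),
        "unique_users": len(sessions_per_user),
        "avg_dwell_time": total_dwell / len(events),
        "bounce_rate": bounces / len(views_per_session),
        # Click-through rate is undefined when nothing was shown.
        "click_through_rate": (
            total_clicks / total_impressions if total_impressions else None
        ),
        "return_rate": returning / len(sessions_per_user),
    }


metrics = engagement_metrics(events)
for name, value in metrics.items():
    print(name, value)
```

Each of these numbers is only a proxy: a long dwell time, for instance, may reflect interest on a news site but confusion on a search results page, which is why the appropriateness of a metric depends on the type of service.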

Once metrics are identified and measured, the challenge becomes deriving a mechanism to optimize them. From a machine learning perspective, two types of approaches are used to drive metrics: directly optimizing a metric when it can be easily formalized mathematically, or indirectly optimizing it through surrogate objective functions. In more advanced forms, metrics are optimized within more complex machine learning paradigms such as reinforcement learning. From an experimental design perspective, certain metrics can be easily observed and improved over the course of an online A/B experiment lasting several weeks, while changes in other metrics, especially long-term ones, can be difficult or even impossible to observe and improve directly through experiments. In this tutorial, we will discuss these aspects and explore new research directions.

Throughout the tutorial, we will use applications from areas in which we have extensive experience (news, search, entertainment, and e-commerce) to demonstrate how online user engagement can be measured and optimized.

Presenters

Here are short biographies of the two presenters of the tutorial.

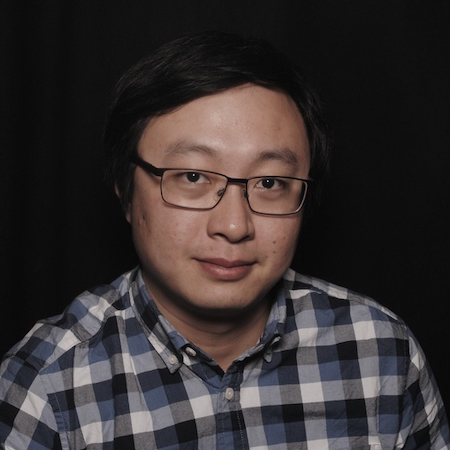

Liangjie Hong

Liangjie Hong is a Director of Engineering, AI at LinkedIn Inc., managing a group of applied researchers and machine learning engineers to deliver cutting-edge scientific solutions for job search and recommendation. Previously, he was a Director of Engineering at Etsy Inc., leading data science and machine learning efforts on Search and Discovery, Personalization & Recommendation, and Computational Advertising. Before that, he was a Senior Manager of Research at Yahoo Research, leading science efforts for Personalization and Search Sciences. Prior to Yahoo Research, he obtained his Ph.D. in Computer Science from Lehigh University in 2013. Liangjie has given numerous technical talks at academic conferences as well as industrial meetings. He also co-founded the User Engagement Optimization Workshop, which has been held in conjunction with CIKM 2013 and KDD 2014.

Liangjie has published extensively on optimizing user engagement in the context of personalization and recommendation systems, including designing metrics and developing machine learning methodologies as well as novel models. He has served as a program committee member for all major applied machine learning and data mining conferences, including KDD, WSDM, WWW, SIGIR, EMNLP and ICML.

Mounia Lalmas

Mounia Lalmas is a Director of Research at Spotify, and the Head of Tech Research in Spotify’s Personalization mission, where she leads an interdisciplinary team of research scientists across Spotify’s four R&D Hubs (Boston, London, New York and Stockholm), working on personalization and discovery. Before that, she was a Director of Research at Yahoo, where she led a team of researchers working on advertising quality for Gemini, Yahoo's native advertising platform. She also worked with various teams at Yahoo on topics related to user engagement in the context of news, search, and user generated content. She also holds an Honorary Professorship at University College London. Prior to this, she held a Microsoft Research/RAEng Research Chair at the School of Computing Science, University of Glasgow. Before that, she was Professor of Information Retrieval at the Department of Computer Science at Queen Mary, University of London. She co-led the Evaluation Initiative for XML Retrieval (INEX), a large-scale project with over 80 participating organizations worldwide, which was responsible for defining the nature of XML retrieval and how it should be evaluated.

Since 2011, her work has focused on studying user engagement in areas such as native advertising, digital media, user generated content, search and, more recently, music. She has given numerous talks and tutorials on these and related topics. She is regularly a senior programme committee member at conferences such as WSDM, WWW, SIGIR and KDD (Industry track). She was co-programme chair for SIGIR 2015, WWW 2018 and WSDM 2020. She is also the co-author of a book written as the outcome of her WWW 2013 tutorial.